Microsoft's president said there is no chance of super-intelligent artificial intelligence being created within the next 12 months and cautioned that the technology could be decades away.

Earlier this month, OpenAI's board of directors removed Sam Altman as CEO. He was reinstated within days, after a weekend of strong protests from the company's employees and shareholders.

Last week, Reuters was the first to report that the removal came shortly after scientists contacted the board to warn of a finding that they feared could have unintended consequences.

The startup's internal project, called Q* (pronounced Q-Star), might be a breakthrough in its pursuit of what it calls artificial general intelligence (AGI), according to an insider. AGI, as defined by OpenAI, refers to autonomous systems that outperform humans at most economically valuable tasks.

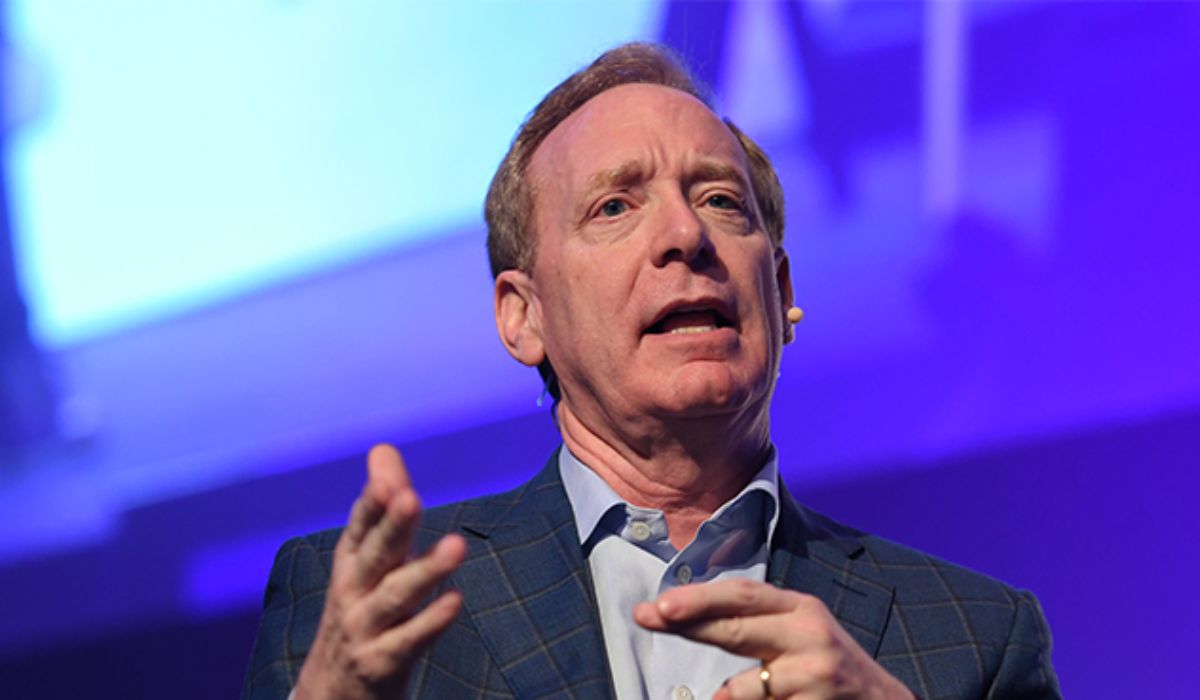

However, Microsoft President Brad Smith, speaking to reporters in Britain on Thursday, rejected claims of a dangerous breakthrough.

“There’s absolutely no probability that you’re going to see this so-called AGI, where computers are more powerful than people, in the next 12 months. It’s going to take years, if not many decades, but I still think the time to focus on safety is now,” he said.

Insiders told Reuters that the warning to OpenAI's board was one factor in Altman's dismissal, alongside various other complaints. These included concerns about commercializing new technologies before their potential risks had been evaluated.

Asked whether such a finding played a part in Altman's dismissal, Smith replied, “I don’t believe that’s true. There was certainly a difference of opinion between the board and others, but it wasn’t primarily about a worry like that.”

“What we really need are safety brakes. Just like you have a safety brake in an elevator, a circuit breaker for electricity, an emergency brake for a bus – there ought to be safety brakes in AI systems that control critical infrastructure, so that they always remain under human control,” Smith added.